AI vs. Human Creativity: Evaluating What Drives Business Innovation

The State of Industrial AI - E82

Recent advancements in Artificial Intelligence (AI), particularly in Large Language Models (LLMs) like ChatGPT and Gemini 1.5 Pro, have significantly enhanced AI capabilities in mimicking human creativity. A study published in Scientific Reports, a Nature Portfolio journal, reveals that people often cannot distinguish between poetry written by AI and that penned by renowned poets like Shakespeare. This finding not only challenges our perceptions of creativity but also raises important questions about AI limitations, AI misconceptions, and the influence of AI training processes on human preferences.

AI’s Growing Role in Creativity and the Impact of Reinforcement Learning

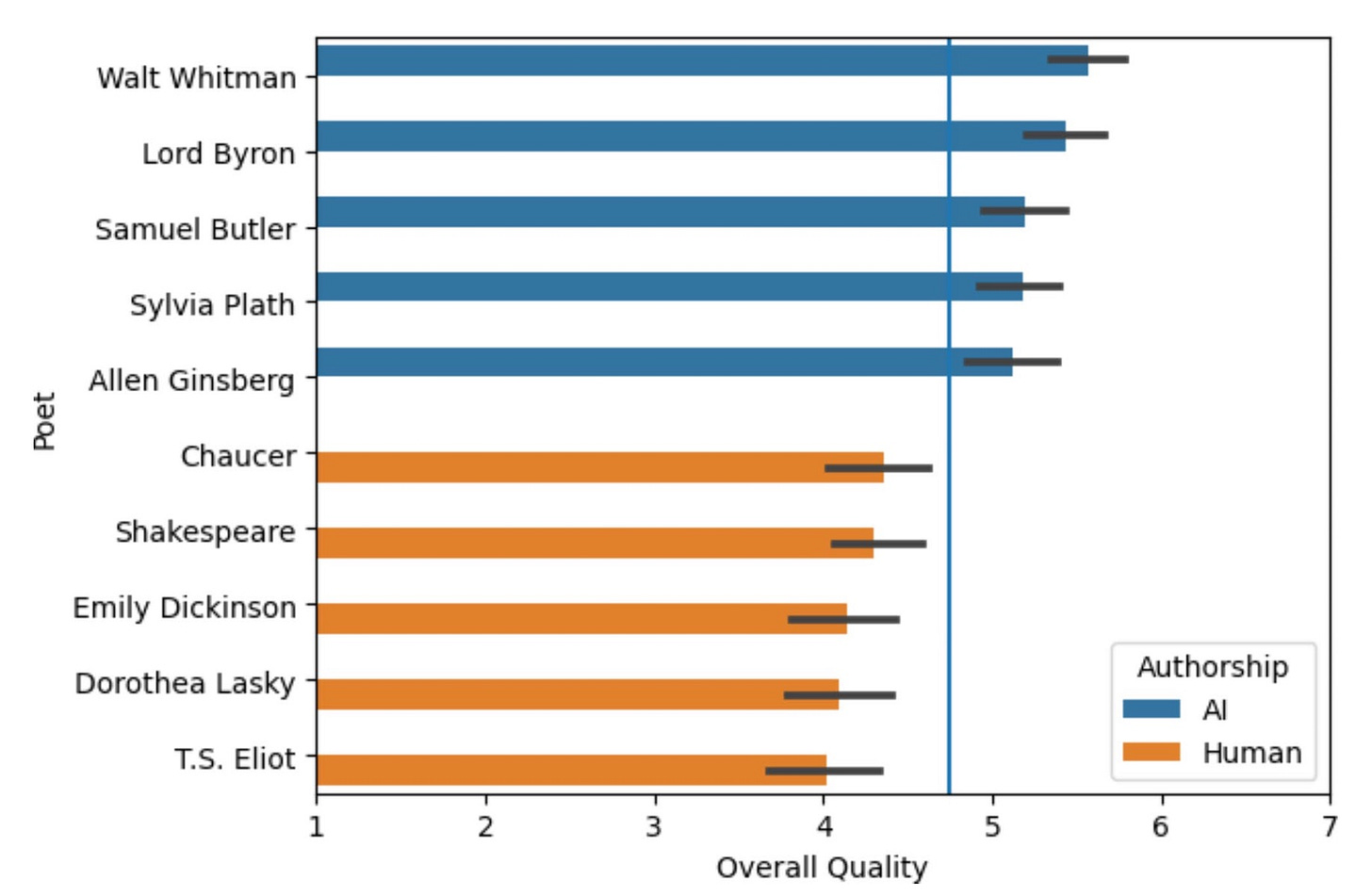

The study highlighted by The Telegraph involved participants evaluating poems without knowing their origins. Surprisingly, many preferred the AI-generated verses over those written by human poets. However, this preference does not necessarily mean that AI produces superior content. Instead, it may reflect how reinforcement learning in AI training processes biases AI outputs toward content that is immediately appealing but not necessarily more correct, brilliant, unique, or authentic.

Modern AI models like ChatGPT are fine-tuned using Reinforcement Learning from Human Feedback (RLHF). In this process, humans review and rank AI-generated outputs, and the AI adjusts its responses to align with these preferences. This method encourages the AI to produce content that is more likely to be favored by evaluators, often prioritizing simplicity and familiarity over complexity and originality. Consequently, while the AI-generated poems may be more accessible, they might lack the depth and innovation found in human-created works that explore complex and novel ideas.
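To make the ranking step more concrete, here is a minimal sketch of how pairwise human preferences can be turned into a learned reward signal. It uses a Bradley-Terry style pairwise loss, a common choice for reward modeling, and is not a description of how any specific product is trained. The single "familiarity" feature, the toy data, and the function names are all assumptions made for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: small when the human-preferred output gets the higher reward."""
    return -math.log(sigmoid(reward_chosen - reward_rejected))

def train_reward_weight(pairs, steps=200, lr=0.1):
    """Fit a one-parameter reward r(x) = w * x by gradient descent.

    Each pair is (feature_of_chosen, feature_of_rejected), where the feature
    is a hypothetical 0-to-1 'familiarity' score of a generated poem.
    """
    w = 0.0
    for _ in range(steps):
        grad = 0.0
        for chosen, rejected in pairs:
            diff = chosen - rejected
            # Derivative of -log(sigmoid(w * diff)) with respect to w
            grad += -(1.0 - sigmoid(w * diff)) * diff
        w -= lr * grad / len(pairs)
    return w

# Toy rankings (assumed): evaluators picked the more familiar poem in 3 of 4 pairs.
pairs = [(0.9, 0.2), (0.8, 0.3), (0.7, 0.4), (0.3, 0.6)]
w = train_reward_weight(pairs)
print(f"learned weight on familiarity: {w:.2f}")
```

Because most evaluators in this toy data chose the more familiar poem, the learned weight comes out positive, so a model optimized against this reward would be nudged toward familiar outputs. That is the bias toward immediate appeal described above, in miniature.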

By adjusting sampling parameters such as temperature, which controls how much randomness enters the model’s word choices, users can influence the creativity and unpredictability of the AI’s outputs. However, the underlying prompting techniques and reinforcement learning mechanisms still guide the AI toward generating content that aligns with established human preferences, potentially limiting the emergence of truly innovative or groundbreaking ideas.
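As a rough illustration of what the temperature setting does, the sketch below rescales a set of made-up next-word scores before converting them to probabilities. The scores and the function name are assumptions for this example. Real models apply the same idea across a vocabulary of many thousands of tokens.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, dividing by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words, most likely first.
logits = [2.0, 1.0, 0.1]

low = softmax_with_temperature(logits, 0.5)   # sharper: favors the top word
high = softmax_with_temperature(logits, 2.0)  # flatter: rarer words get a chance

print("T=0.5:", [round(p, 3) for p in low])
print("T=2.0:", [round(p, 3) for p in high])
```

A low temperature concentrates probability on the most likely word, while a high temperature spreads it out. In both cases the ranking of the candidates is unchanged: temperature alters how adventurous the sampling is, not what the model has learned to prefer.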

Human Judgment, Bias, and the Limitations of Reinforcement Learning

An intriguing aspect of the Nature study is that participants tended to prefer the simplicity and immediacy of AI-generated poetry. This preference highlights a potential limitation in AI training processes: by optimizing for content that receives positive feedback, AI models may inadvertently suppress outputs that are more complex or challenge conventional thinking. This bias toward the familiar can hinder the AI’s ability to produce content that is more correct, brilliant, or unique, especially when dealing with complex and new concepts.

When participants were informed that the poems were AI-generated, their evaluations became more negative, a phenomenon known as algorithm aversion that shows how preconceptions about AI shape human judgment. Moreover, the study notes that when a human selects the best AI-generated poems (a process known as having a “human in the loop”), the selected pieces perform even better with other readers.

This underlines a potentially hidden danger of RLHF. Just as social media platforms tend to push users toward polarizing content and reward simple messaging over complex thought, LLMs could produce similar unintended side effects.

The Coca-Cola AI-Generated Ad Controversy and Perceptions of Authenticity

The bias against AI-generated content extends into visual media, as seen with Coca-Cola’s recent AI-generated holiday commercial. The ad sparked significant backlash online, with a tweet criticizing the ad receiving over 58,000 likes. Critics expressed concerns that relying on AI diminishes the authenticity and creative depth of advertising content. Reports from Mashable and The Independent highlight public apprehension about AI risks and the potential loss of human touch in creative works.

There appears to remain a strong desire for authenticity and originality that AI may struggle to replicate. Relying on AI’s learned bias toward likability may result in content that lacks the innovation and uniqueness that come from human creativity, especially when exploring complex or novel ideas.

The Tug of War Over AI Labeling and the Future of Creativity

As AI continues to produce content that closely mirrors human creations, there’s a growing debate over labeling AI-generated works. Creatives and labor advocates argue for transparency to protect the value of human work and to allow audiences to make informed choices. Businesses may resist such labeling to avoid potential bias against AI-produced content.

The core issue revolves around whether the preference for AI-generated content is genuine or a product of AI’s training processes that favor immediate appeal over depth and originality. As AI models become more adept at predicting and replicating human preferences through reinforcement learning, they may inadvertently discourage the creation of content that is complex, innovative, or challenges the status quo. The reliance on AI-generated content optimized for likability may lead to a saturation of similar ideas, reducing diversity in thought and expression.

This dynamic necessitates a reevaluation of AI use cases and underscores the importance of keeping not only humans but experts in the loop to ensure that creativity continues to evolve. By recognizing the limitations imposed by reinforcement learning biases, we can better understand the areas where AI excels and where human ingenuity remains irreplaceable.

Implications for Industrial Companies: The Critical Role of Experts

For industrial companies, these developments underscore the necessity of integrating human-in-the-loop, especially expert-in-the-loop, approaches in deploying AI systems. It becomes critical to establish objective rules for evaluating AI-generated outputs and to disclose these evaluation criteria to users of the models. Since two experts might assess a highly creative solution differently—potentially influenced by their own biases—having transparent, standardized evaluation frameworks is essential. This ensures that the true quality of AI-generated responses is accurately assessed, beyond subjective preferences.
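One way such a framework could look in practice is a published scoring rubric combined with a simple check for expert disagreement. The sketch below is only an illustration under stated assumptions: the criteria, weights, 1-to-5 rating scale, and disagreement threshold are all hypothetical and would need to be set by the domain experts themselves.

```python
from statistics import mean, pstdev

# Hypothetical rubric: criteria and weights are assumptions, disclosed to model users.
RUBRIC = {"correctness": 0.5, "feasibility": 0.3, "novelty": 0.2}

def weighted_score(ratings):
    """Combine one expert's 1-to-5 ratings into a single score using the rubric.

    Raises KeyError if a rubric criterion is missing from the ratings.
    """
    return sum(weight * ratings[criterion] for criterion, weight in RUBRIC.items())

def evaluate(expert_ratings, disagreement_threshold=0.5):
    """Score one AI-generated output and flag it when experts diverge."""
    scores = [weighted_score(r) for r in expert_ratings]
    spread = pstdev(scores)  # population standard deviation across experts
    return {
        "mean_score": mean(scores),
        "spread": spread,
        "needs_review": spread > disagreement_threshold,
    }

# Two experts rate the same highly creative proposal very differently.
expert_a = {"correctness": 4, "feasibility": 4, "novelty": 5}
expert_b = {"correctness": 4, "feasibility": 2, "novelty": 1}

result = evaluate([expert_a, expert_b])
print(result)
```

Flagging high-disagreement outputs for discussion, rather than simply averaging them away, keeps unconventional solutions from being quietly discarded because one reviewer found them unfamiliar.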

We anticipate a world where companies with substantial expert resources can develop their own in-house specialized models fine-tuned to specific applications. These bespoke models, refined by domain experts, will be better equipped to meet specialized needs and could compete effectively with models from other companies. By leveraging their expertise, these organizations can mitigate the limitations imposed by generalized reinforcement learning biases and produce AI solutions that are not only innovative but also aligned with industry-specific standards and objectives.